After Covid-19, I committed my life to making pandemics history. In my role at the UN Special Envoy’s office on community health across Africa, I had already been deeply primed for the horrific violence of infectious diseases during the 2014 Ebola outbreak. But the Covid pandemic laid bare humanity’s incapacity for outbreak response at a global scale and the profound loss of control that ensued. This experience — as I battled to scale global vaccine manufacturing at Novavax, always one step behind the virus — drove my pivot from global health to biosecurity, to prevent pandemics before they begin.

I believe in technology’s power to help us end pandemics. Still, since founding Sentinel two years ago, I’ve confronted the increasingly powerful convergence of AI and synthetic biology with vigilance. Even with that belief, the current trajectory of AI gives me the same loss-of-control feeling I had during Covid-19. What happens when AI agents, which are easy entry points for non-experts, integrate with sophisticated biodesign tools? Will the democratization of biology enable bioterrorists to engineer pathogens with enhanced transmissibility, virulence, or immune evasion, long before we have the defenses to take pandemics off the table?

These questions keep me up at night — and also galvanize me to action, seeking to address deficits in capital and engagement. We’re one of just two philanthropic organizations deploying at least $10M per year in the biotech governance space — a pittance compared to the estimated $9-15B in climate philanthropy in 2024 alone. The consequence of this funding gap is that fewer than 100 people worldwide work full-time on directly tackling the challenge of preventing advanced biotechnology misuse.

I know biotech governance is tractable: our partners are making AI models safer and implementing biosecurity screening for synthetic nucleic acids. In just two years, efforts from the biosecurity community and synthesis industry have increased screening among gene synthesis providers by 180%.

The window to get ahead of these risks is narrow; perhaps a matter of years, not decades. The pace of technological advancement isn’t slowing down — we’re looking ahead at the development of an interconnected tech stack of AI agents, biological tools, and outsourced manufacturing that will dramatically lower barriers to entry.

We hope we can scale the required work fast enough to meet the moment. To do so, we need to at least double the number of people working on this issue and rapidly grow the funding available. We are confident that we can responsibly deploy $75M+ over the next three to five years toward biotech governance, preventing misuse until the defenses that will pandemic-proof the world come online. With this funding, we can accelerate biotech governance, expand screening infrastructure, and build the safeguards that will prevent catastrophe.

We’re deeply grateful to everyone who shares our commitment to making pandemics history and to the partners in governments, non-profits, and companies around the world whose dedication makes this work possible.

Impact to date

As I reflect on Sentinel’s first two years, it’s encouraging to see the hard work of our partners and the real momentum building across the biosecurity field. We set ourselves a clear win condition: in our lifetime, no malicious or reckless actor uses advanced biotechnologies to create and release pandemic pathogens. We’re constantly sharpening our theory of victory to get there. Our initial focus areas — AI-enabled biosecurity risks and nucleic acid synthesis screening — are gaining traction thanks to the work of researchers, advocates, and policymakers worldwide. Governments are creating policies; companies are adopting safeguards; the work of our partners is paying off.

Safeguarding biological AI models

In our AI-biosecurity program, we’ve invested heavily in mapping the landscape to distinguish between imminent and long-term challenges and prioritize accordingly.

SecureBio’s Virology Capabilities Test, which Sentinel funded, shows how frontier LLMs can now outperform expert virologists, underscoring the growing risks of democratizing biotechnology. This evidence prompted companies to add safeguards to their most powerful models. For narrower biological design tools, we commissioned Epoch to document that only 3% of over 300 models had any safeguards as of early 2025. Our partnership with CLTR and RAND revealed that 61.5% of the highest-risk frontier models are open source, with code, weights, and training data publicly available. This is concerning because open-source models with publicly available weights and data can be accessed and modified by malicious actors to bypass safeguards designed to prevent misuse.

In 2025, we also moved beyond assessing risks in the digital realm to understanding how AI lowers barriers in the real world. Early this year, we funded the largest randomized controlled trial of AI uplift, testing whether LLMs can meaningfully assist novices in executing complex laboratory protocols. This study generated crucial evidence on whether — and under what conditions — AI models could soon expand the pool of actors capable of developing pandemic pathogens.

In 2026, we’ll expand beyond assessing AI-Bio risk to pressure-testing the feasibility of model-based mitigations for biological tools. We’ve already begun pilot efforts, such as our partnership with CEPI to explore managed-access protocols for their vaccine design models. While similar methods have proven effective on language models like ChatGPT (preventing them from assisting would-be bioterrorists), they haven’t yet been sufficiently tested on specialized biological design models that could enable the design of pandemic pathogens. And critically, we must assess how much safeguarding some models matters when bad actors may be able to use unsafeguarded alternatives instead.

As we test the feasibility of model-based safeguards, we’ll need governments to create the conditions for their widespread adoption. Work in this field has led to initial progress — with briefings from our partners resulting in concrete policy outcomes in both the UK and the EU — and I expect a sustained effort to cement these mitigations on the global policy agenda. This will be a tough battle, but one that I see no choice but to embark on.

Preventing bad actors from buying hazardous DNA online

In our DNA security work, we’re focusing relentlessly on improving two key indicators of progress towards our victory condition: the prevalence and quality of biosecurity screening conducted by DNA providers worldwide. Our ambitious goal is to have at least 80% of companies conduct high-quality screening to prevent the misuse of synthetic DNA by 2030; our threat modelling suggests that we need to reach this threshold to create substantial barriers to illicit acquisition of concerning sequences. Thanks to our partners, we have made significant progress: a 180% increase in gene synthesis screening over the past two years, which gives me tremendous hope.

This progress has largely been driven by a shifting policy landscape. In the U.S., which accounts for 25% of all gene synthesis companies globally, two consecutive presidential administrations have catalyzed increased adoption by emphasizing DNA security in executive orders, the AI Action Plan, and official R&D budget priorities. Efforts from civil society played a key role in making this issue an executive priority — including the Nuclear Threat Initiative’s work to establish interventions to reduce global biological risks, the Johns Hopkins Center for Health Security’s work on U.S. biosecurity policy, and red-teaming efforts by groups like the Engineering Biology Research Consortium and SecureDNA.

But we need to go further. Executive action needs to be complemented by legislation to ensure enforceable screening across the private sector. Fortunately, there is now a broad coalition of stakeholders — including the DNA synthesis industry, frontier AI companies, and the leading America-first think tank — whose advocacy we’re confident will translate to legislative action in 2026.

Last December marked a major policy milestone for the field, as the EU Commission unveiled a proposal for the flagship EU Biotech Act, which would mandate DNA screening across Europe, a region that accounts for 11% of gene synthesis companies. This is an extremely ambitious policy with teeth, including articles on enforcement, auditing, and penalties for non-compliance, and it pushes aggressively towards our win condition. If Parliament and Council pass this proposal in 2026, it would represent one of the biggest policy wins for biotech governance in a decade.

We’re proud of this progress, which reflects mission-driven efforts inside and outside of government worldwide. We see ourselves as “humanity’s directly responsible individuals” for the orphan issue of DNA security. Sentinel provided more than 70% of the philanthropic funding to this field in 2025, and we’re partnering with most, if not all, of the two dozen people in the world who work full-time on this problem. We are confident we wouldn’t have made this progress without our small but mighty coalition.

2025 Learnings

Pressure-testing our own strategy

A critical ingredient in Sentinel’s impact is being relentlessly truth-seeking, transparent, and nimble. We prioritize doing critical in-house research to honestly assess and update our strategy — to be productively paranoid about avoiding path-dependencies. Sentinel doesn’t exist to make grants to ‘the field’; we’re here to solve the problem.

In Q3-4, we did a research sprint to answer:

- If we’re successful, how much would synthesis screening reduce bioterrorism risks?

- Are any other physical materials even better chokepoints than synthetic DNA?

After two quarters of intensive research — supported by a dedicated team of research contractors — we learned that:

- Preventing the misuse of synthetic nucleic acids won’t stop every attack, but achieving our win condition of at least 80% global screening coverage would meaningfully reduce the risk of engineered pandemics by raising significant barriers to non-state actors. We came away with even more conviction that this work is worth the investment.

- Having evaluated >200 alternative chokepoints, from laboratory equipment to biological reagents, nothing stands out as clearly superior to synthetic DNA in terms of creating access controls to prevent bioterrorism.

- That said, we identified a few chokepoints that could be nearly as promising as DNA synthesis security, including commercial live pathogen repositories, contract research organizations, and cloud labs. We’re currently assessing whether these should become a focus area for us.

Plans for 2026 and Beyond

As we wrote last year, Sentinel will remain laser-focused on preventing the accelerating risks from the convergence of AI and biology. We see prevention efforts as both massively neglected (with <$50M invested per year, and fewer than 100 people working full-time on these issues) and hugely cost-effective, with millions invested in prevention policy offsetting billions in economic risk and saving millions of lives. Of course, we are also heartened to work alongside excellent partners solving other aspects of the layered defense needed to eliminate pandemics, from early warning systems that catch pandemics early to countermeasures like next-generation PPE that protect critical workers during an outbreak.

To achieve our win conditions — securing the highest-risk biological AI models, agents, and data and safeguarding synthetic DNA — we estimate we’ll need to raise and deploy $75M+ over the next 3-5 years, starting with $15-20M in 2026.

We’re also building out our tiger team: Dr. Toby Webster joins us from RAND to lead our AI-Bio program area, where he will focus on mitigations for biological AI models. I’m extremely excited to welcome Toby to the team and confident he will help accelerate our future progress.

AI-Bio: Securing Closed-Source Models and High-Risk Data

In 2026, we’ll further sharpen our AI-Bio win condition, defining specific goals around: 1) securing closed-source biological AI models, 2) protecting high-risk biological datasets that enable fine-tuning of open-source models, and 3) safeguarding AI agent interactions with biological AI models.

A centerpiece of our 2026 AI-Bio work will be creating a research agenda to test the feasibility of model-based mitigations and data safeguards, which is especially important in the face of proliferating open-source models. If our research agenda is promising and we move past critical ‘go/no-go’ thresholds, we’ll direct resources to support mitigation development, testing, and adoption.

AI capabilities are advancing faster than our ability to safeguard them. We need additional talent across the AI-Bio ecosystem — people who can rapidly assess new risks, design and test such mitigations, and translate research findings into policy action in the most important jurisdictions.

DNA Synthesis Security: Implementation and Geographic Expansion

In 2026, Sentinel will focus on three areas within DNA synthesis security: 1) building the infrastructure to implement imminent screening mandates, 2) solving persistent technical challenges, and 3) moving the Overton window in key geographies.

In the jurisdictions with momentum towards mandatory screening (the EU, U.S., and UK), we’ll work with government, non-profit, and industry partners to ensure that any new policies are effectively implemented. This means piloting functions that governments should eventually perform, such as auditing and data aggregation to track suspicious patterns across the industry. These initiatives are highly operational, and we expect to spend significant time recruiting founders to spearhead new programs and organizations.

As the field makes progress on screening uptake, we also need to enable high-quality screening. This involves refining efficient and robust approaches to screening against AI-obscured sequences and shorter DNA fragments, as well as securing tabletop synthesis devices.

Finally, we must make progress in regions where our theory of victory is less clear. China, which accounts for ~34% of DNA providers globally, looms especially large, and it will be critical for us to find and empower the right partners to make progress in this domain.

Our victory condition is extremely ambitious, and the default trajectory still falls short. But the path forward is clearer than ever, and the political will is finally catching up to the technological reality. Achieving our victory condition within five years is possible — but it will take our continued investment and the hard work of our partners to sustain our current momentum.

Looking forward

Six years ago, I watched the world lose control to a natural pandemic. Today, we face a different kind of race — one where the tools to engineer catastrophe are advancing faster than our safeguards.

But here’s what gives me hope: In just two years, we’ve proven that biotech governance is tractable. Working alongside partners across the biosecurity community, we’ve moved the needle on DNA screening and seen major policy shifts across key governments.

The window to get ahead of AI-biology convergence risks is narrow, but it’s still open. Continuing our joint efforts with our incredible partners in this ecosystem, we can build the infrastructure, develop the technical solutions, and create the policy frameworks that will make engineered pandemics as preventable as they are terrifying. Thank you for believing in this mission when the stakes couldn’t be higher.

Claire Qureshi

Appendix

Sentinel’s 2025 grantmaking

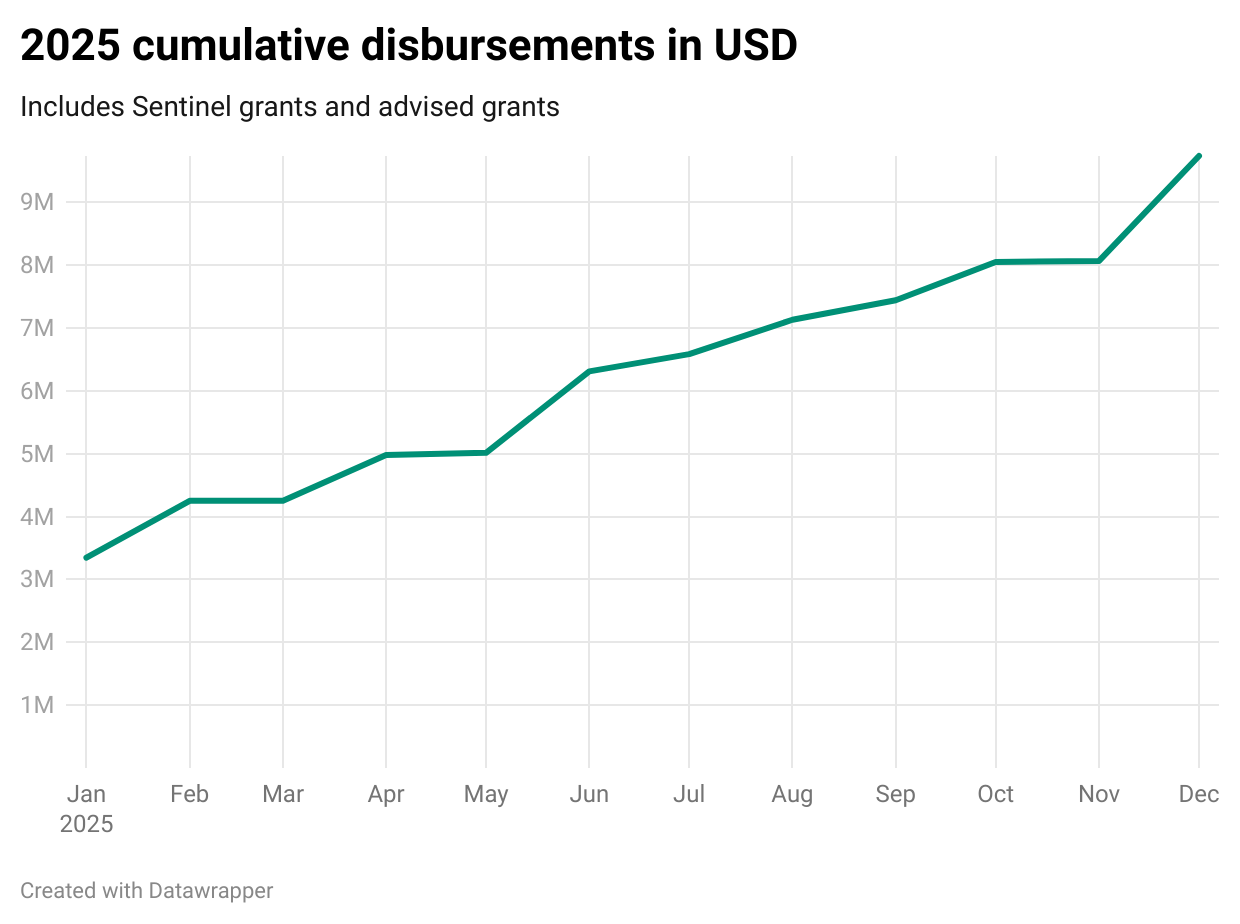

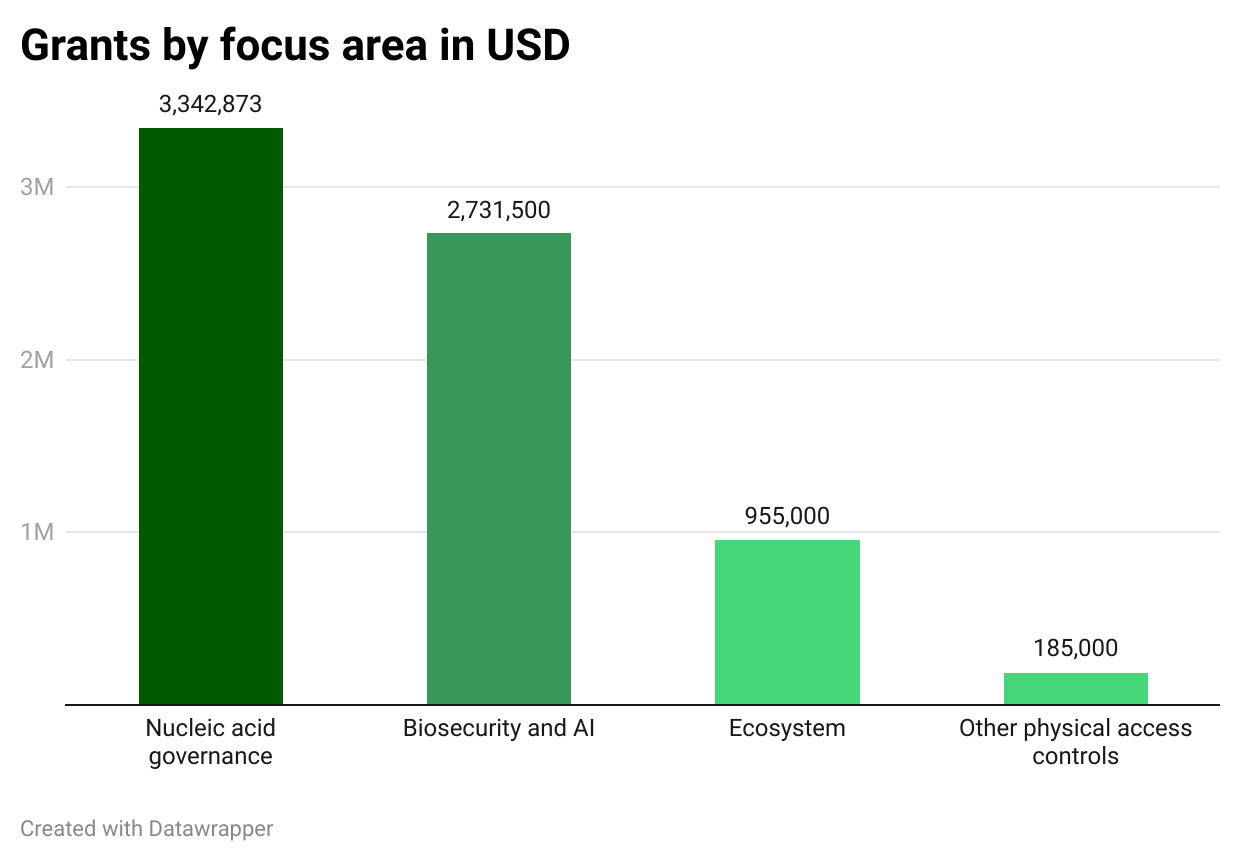

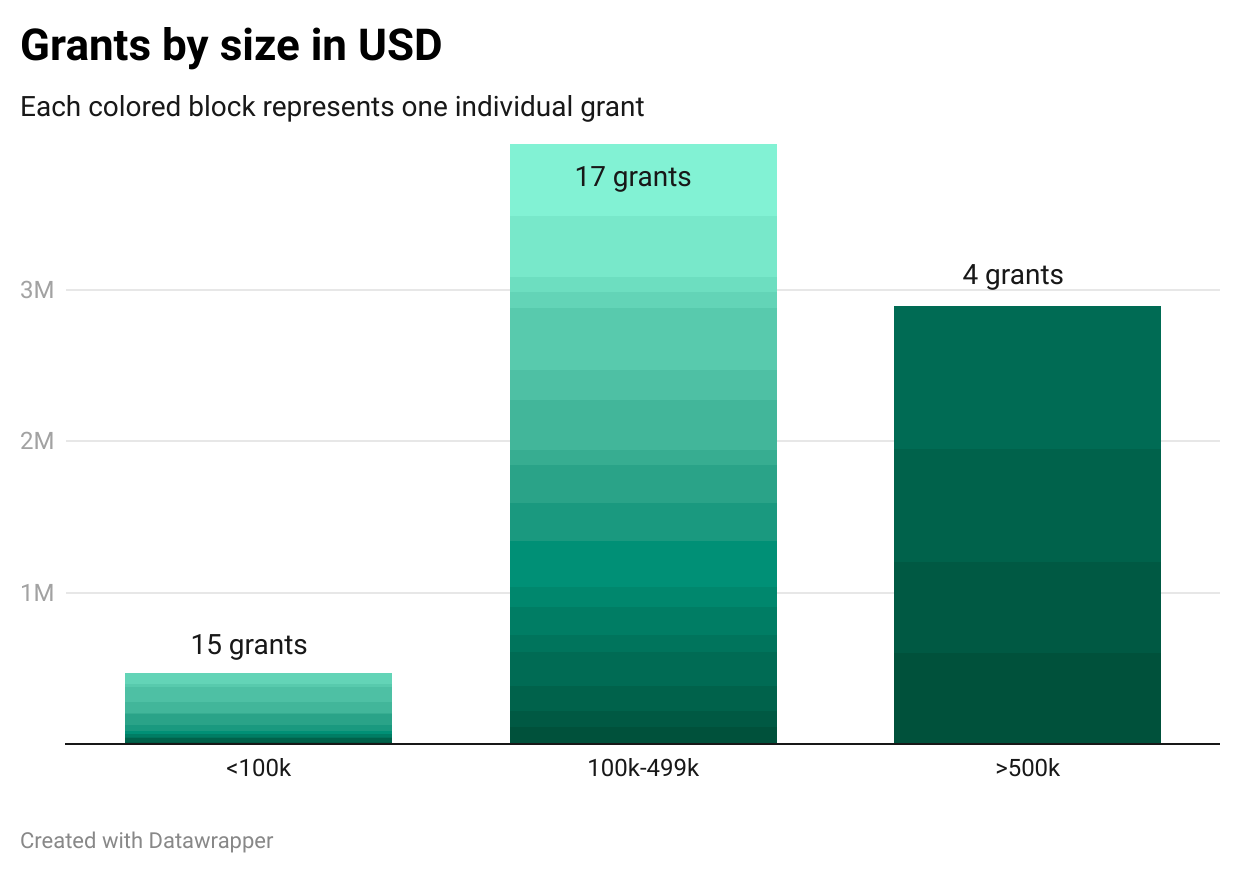

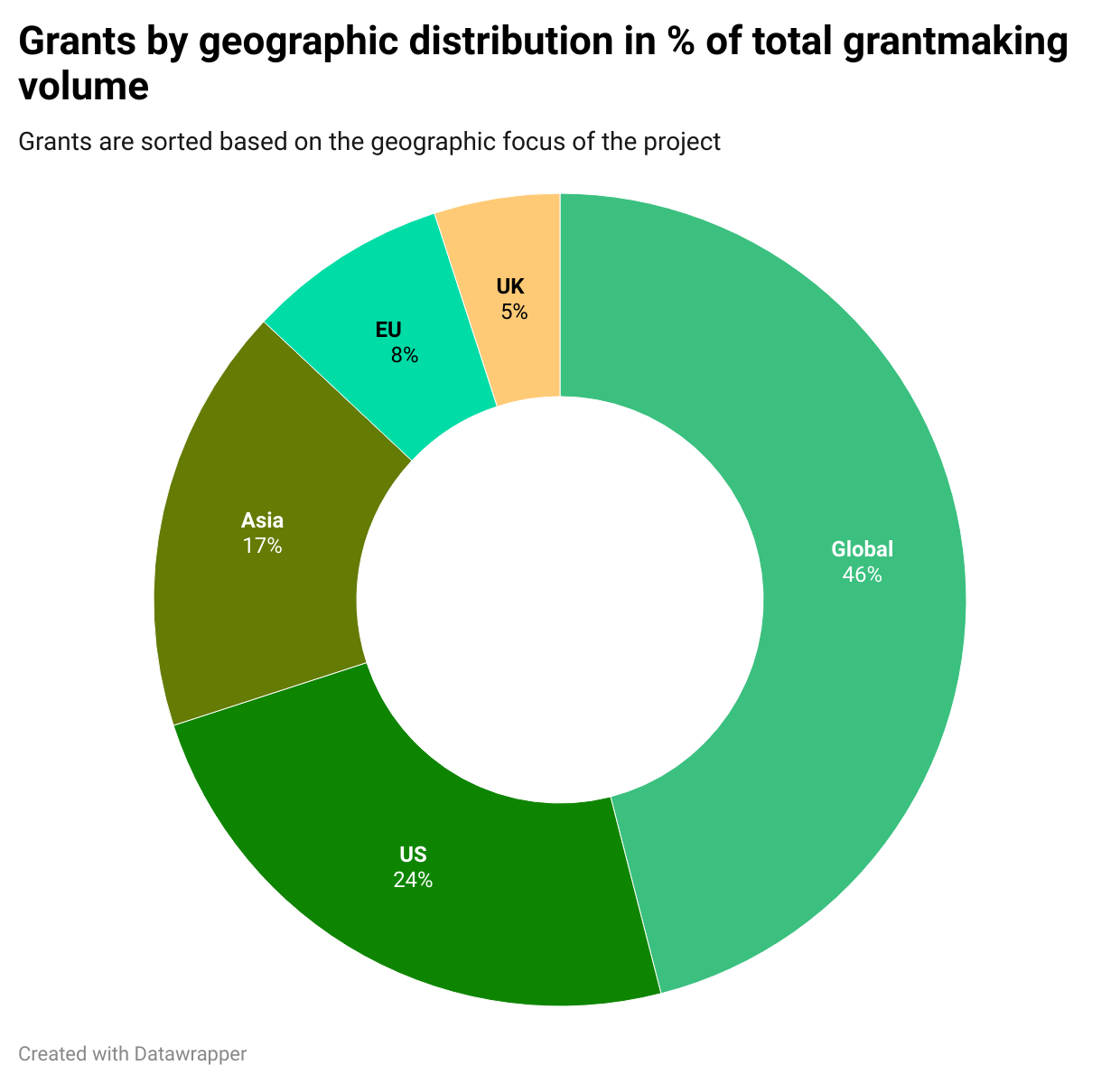

In 2025, we made 36 grants totaling $7.2M (64% Sentinel, 36% advised). In line with our two focus areas, we allocated $3.3M to DNA synthesis governance, $2.7M to AI-Biosecurity, and the remainder to ecosystem building, chokepoints, and countermeasures. Most projects had a global focus (46%), followed by the U.S. (24%), Asia (17%), the EU (8%), and the UK (5%). The accompanying visualizations include the cumulative disbursements and grant size distribution.

Of these 36 grants, 28 (~78%) were thesis-driven: when we identify a gap, we develop a thesis for how to address it, find the right partners to execute, and often manage projects closely through completion.
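For readers who want to check the arithmetic behind these figures, here is a minimal sketch in Python. It uses only the numbers quoted in this appendix; the variable and function names are illustrative, and the dollar split between Sentinel and advised grants is an approximation derived from the rounded 64%/36% shares rather than a reported figure.

```python
# Figures quoted in the appendix text (USD millions unless noted).
TOTAL_GRANTS = 36
TOTAL_USD_M = 7.2
SENTINEL_SHARE, ADVISED_SHARE = 0.64, 0.36
DNA_USD_M, AI_BIO_USD_M = 3.3, 2.7
THESIS_DRIVEN_GRANTS = 28
GEO_SHARES_PCT = {"Global": 46, "U.S.": 24, "Asia": 17, "EU": 8, "UK": 5}


def summarize():
    """Derive the implied figures from the quoted totals and shares."""
    return {
        # Ecosystem building, chokepoints, and countermeasures combined.
        "remainder_usd_m": round(TOTAL_USD_M - DNA_USD_M - AI_BIO_USD_M, 1),
        # Approximate: based on rounded percentage shares.
        "sentinel_usd_m": round(TOTAL_USD_M * SENTINEL_SHARE, 1),
        "advised_usd_m": round(TOTAL_USD_M * ADVISED_SHARE, 1),
        "thesis_driven_pct": round(100 * THESIS_DRIVEN_GRANTS / TOTAL_GRANTS, 1),
        "geo_total_pct": sum(GEO_SHARES_PCT.values()),
        "avg_grant_usd_m": round(TOTAL_USD_M / TOTAL_GRANTS, 2),
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

Under these assumptions, the remainder outside the two focus areas comes to roughly $1.2M, the average grant is about $0.2M, and the geographic shares sum to 100%.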

The advised portion of our grantmaking reflects close collaboration with other funders to direct resources toward high-impact projects. We’ve also brought funders with diverse worldviews into the biosecurity space, expanding the coalition around AI-biology risks. Today, more than 20 funders support Sentinel, proving that preventing catastrophic biorisks is a shared priority.